ChatGPT is making your job obsolete – or is it?

By this point, you’ll probably have heard quite a lot about ChatGPT. Since its launch in November last year, OpenAI’s new AI chatbot has been the focus of breathless news coverage and wild social media speculation about how it’s going to change, well, everything.

ChatGPT, you see, isn’t just a tipping point for AI or the start of the AI revolution. It’s also going to disrupt everything from Google’s search business to college essays, while making lawyers and programmers redundant. And if we step back and take a broader view, the implications become even scarier – the entirety of white-collar work is apparently at risk.

Such is the impact of ChatGPT that Microsoft is even hoping it can do the impossible and make Bing a viable challenger to Google. In fact, they’re so optimistic about ChatGPT’s capacities to revolutionise web search, they’ve already funnelled billions of dollars into funding OpenAI’s activities.

If we push out from the safer shores of mainstream media coverage and into the wild waters of social media tech influencers, the prospects for the imminent AI takeover are even more extreme. Apparently, ChatGPT heralds the end of coding, higher education, and writing of any kind within the next three years.

While some of these speculations are, to be clear, palpably absurd, they are also everywhere. And that in itself is telling. The fact that ChatGPT has triggered such a collective self-reckoning across almost every social, cultural and economic sphere tells us quite a lot about the time we’re living in.

It’s not just that the tech sector is in a major downturn and looking for the next big thing to revive its flagging fortunes – although this is obviously a factor. The great AI furore of the past year is also inseparable from all those post-pandemic uncertainties about what the future holds – from the changing nature of work to the growing climate crisis, from economic turmoil to spiralling geopolitical tensions.

In this context, a new technology that seems “indistinguishable from magic” (to borrow Arthur C. Clarke’s much-cited phrase) is bound to attract our hopes and fears like iron filings to a magnet.

It’ll likely be quite some time before we truly understand the implications of generative AI. We’re certainly a long way from being able to determine whose jobs it will or won’t be taking. But in the short term, we can ask a simpler and more pragmatic question: is ChatGPT really the game-changing innovation it’s made out to be? And should you be immediately rushing to take advantage of it for your own day-to-day tasks?

Of course, plenty of people are already experimenting with ChatGPT – and are more than willing to share their results. ChatGPT has been used for ad copy, legal briefs, cover letters, real estate listings, and even scientific papers (though not without controversy).

But despite the obvious enthusiasm, there are some dissenting voices. In December, The Atlantic published a piece by the game designer and tech critic Ian Bogost titled “ChatGPT is Dumber Than You Think.” His takeaway was simple: “treat it like a toy, not a tool”.

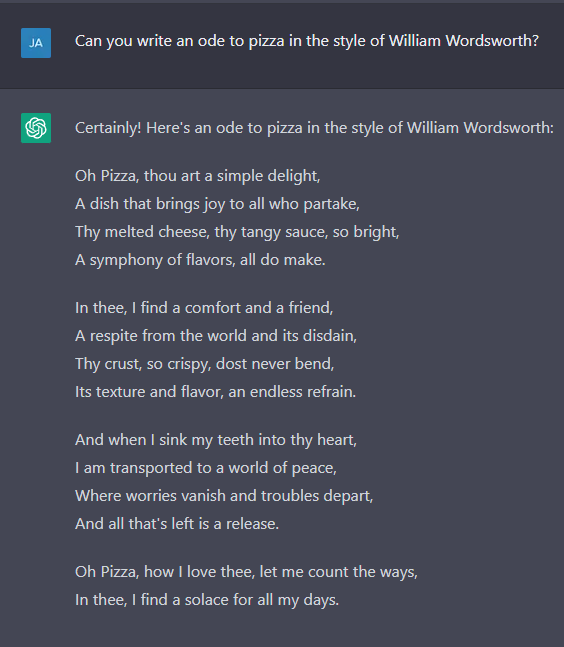

It might seem like Bogost and other critics are just trying to spoil the fun, but if you spend a little time with ChatGPT – and take a closer look at how it works – it becomes clear that they really have a point. When you start to look past the seemingly magical experience of getting a chatbot to write, say, a poetic ode to the wonders of pizza, the reality is a bit less impressive.

In fact, it’s quickly becoming clear that ChatGPT is better treated as a kind of silly parlour trick to amuse your friends than as something you can seriously use as part of your daily working life – for the moment, anyway.

To illustrate the point, let’s just take a look at some of the fairly major problems that have reared their heads in the few months since ChatGPT was launched.

1. It gets basic things wrong. Like, all the time.

While ChatGPT was quickly heralded as the death knell for Google’s search supremacy, it was just as quickly discovered that it’s not the most trustworthy source of information.

Which is a generous way of saying that it frequently makes huge, baffling errors when you ask it for simple information.

The funniest example can be found in this Twitter thread (warning: it features some NSFW language), in which ChatGPT gets confused about what a mammal is. Mashable, meanwhile, documented a whole range of mistakes they uncovered while testing the software, including the chatbot getting basic historical and geographical facts wrong for no obvious reason.

And when it comes to maths questions, ChatGPT is similarly inconsistent.

The big issue here is not just that ChatGPT is wrong – it’s that it’s confidently wrong. It gives you incorrect information with just the same level of self-assuredness as it does when it’s giving you the right answer. Which means that there’s no way to tell the two apart unless you check the answer yourself – by Googling, for instance.

At which point, it’s hard to see why you’d bother going to ChatGPT in the first place. As Wired magazine put it, from this perspective ChatGPT is just a “superficially eloquent and knowledgeable chatbot that generates untruths with confidence”.

I decided to check this out myself by asking the chatbot a few questions about subjects that I already know a little bit about. While it was often able to give me the correct answer to my questions, it also threw out some inexplicable falsehoods from time to time.

For instance, when I asked it about a novel by the prominent American writer Don DeLillo, it first claimed to know nothing about it. Then it got the answer completely wrong. On further probing, it simply contradicted itself, and then eventually gave the right answer without acknowledging its previous mistakes.

Similarly, when I asked it to name the artist responsible for a work called “Twittering Machine” (the correct answer is Paul Klee, a well-known Swiss-born German artist), it named a different German artist from the same period for no apparent reason. When I pointed out that it was wrong, it at least had the good grace to ask for help – but does this mean it’s now part of my job to help train an errant AI?

All in all, not an impressive performance for a supposed Google alternative. Indeed, for a variety of reasons we’ll get into below, most people seriously involved in AI research will tell you that using it to replace a search engine is far harder than might initially appear – whatever Microsoft might hope.

Of course, you can argue that the most exciting thing about ChatGPT isn’t its potential as a replacement for Google – it’s as a replacement for content writers! (Obviously, I have a vested interest here, but let’s set that aside for now.)

But here too ChatGPT’s factual inaccuracies can be a major problem. Let’s say you need to write a few paragraphs for a short blog post and decide to turn to ChatGPT to make your life a little easier. Great, it’ll churn something out in a few seconds, and it’ll probably sound decent… But now you’re going to have to make sure it’s not made any glaring factual errors or misrepresented your chosen topic in some fundamental way. Which, needless to say, takes time.

In the end, you might have sped up the actual creative part of your job, but you’ve also given yourself a whole new one: supervising an unruly machine. Is this really a big improvement?

2. Oh, and it gets more complicated things wrong, too!

As you might expect, things don’t get any better for ChatGPT when you ask it to do something more complex than supplying basic facts. Like writing code, for instance.

When announcing ChatGPT, OpenAI were eager to tout its usefulness for programmers who need help debugging code. And following its launch, there was a great deal of excitement about just how much it seemed to be able to do, including writing code from scratch using simple written instructions.

Nevertheless, it didn’t take long for people to realise that ChatGPT was no more reliable at writing code than it was when naming the second-largest country in Central America. Within weeks, Stack Overflow – a Q&A website for programmers – was forced to ban users from posting answers from ChatGPT because of their tendency to be flat-out wrong.

In its statement explaining the issue, the site noted that the key problem was they couldn’t rely on people to verify the answers they were getting from ChatGPT. This lack of due diligence meant the site was being flooded with false information, eroding the trust and sense of mutual respect that was essential for the site to operate.

After all, if you can’t expect the person answering your question to actually know whether their answer is right or not, why would you bother asking the question in the first place?

3. It doesn’t know about anything that happened in the last 18 months. (Hint: A lot happened.)

While ChatGPT may make mistakes about things it should in theory be able to get right, there’s also a whole swathe of stuff it simply will not know. And this includes anything that’s happened since September 2021.

This is because, despite the way it sometimes gets talked about, ChatGPT doesn’t “know” anything in the familiar sense. It’s less like a store of information than it is an elaborate synthesiser that can remix the texts it has been exposed to – its “training data”.

This training data is extensive, impressively so. But it’s also limited. And one of its major limitations is that it only includes material produced up to a specific date. If anything happened after that, it won’t be able to factor it into its responses. In ChatGPT’s case, its training data doesn’t extend past September 2021.

As you’ll probably be aware, the past eighteen months have been, well, pretty eventful. The fact that ChatGPT is entirely ignorant of this is a major problem if you’re dealing with any topic that relies on up-to-date information.

For instance, let’s say you’re getting interested in the metaverse and you want to write a short piece about it. You turn to ChatGPT for some initial research, and ask it who the biggest metaverse companies are.

A decent enough answer, up to a point. That point being, of course, Facebook’s rebranding as Meta in October 2021, which ChatGPT knows nothing about. And while some may prefer to be blissfully unaware of Zuckerberg’s metaverse ambitions, it’s pretty much essential information for anyone writing about the topic.

4. It likes to just make things up sometimes.

We’ve established that ChatGPT gets things wrong, and that’s obviously a big problem. But it also does something more insidious – it actively makes things up. And in many ways, this is much worse.

In fact, ChatGPT isn’t unique here. This is actually a common attribute of generative AI models – so common in fact that there’s a specific name for it in AI research: hallucination.

I decided to try and see how easy it was to make ChatGPT start making things up. And one area that seems to trigger ChatGPT’s imagination is asking it for articles on a particular subject – something that may be of interest to university students, who are apparently going to turn en masse to ChatGPT to write their essays.

I asked ChatGPT for some articles on the American composer John Cage to test this out. (Full disclosure: I wrote a doctoral thesis on Cage somewhere in the distant past.) Its response was superficially pretty helpful.

Great! ChatGPT has given me a useful starting point for doing some research on John Cage! What an amazing time-saver!

Oh wait, no. It turns out that not a single one of these articles actually exists.

Part of what makes this troubling rather than just funny is how believable the responses are. The authors are all real scholars who have written about Cage, and the journals are real too. Even the titles are believable. All in all, these articles genuinely could exist. Unfortunately, they don’t.

ChatGPT also doesn’t do too well when called out on its lies. I asked it for the last line of Herman Melville’s famous whale-hunting novel “Moby-Dick”, which it (surprise!) got wrong. Instead, it offered me a different line from near the end of the book (which it also falsely attributed to the narrator, Ishmael). When challenged, the chatbot just started to invent things wholesale, and the exchange got very confusing.

I’m not going to deny that there’s something fundamentally tragic about arguing with an AI chatbot. But in my defence, it also helps to highlight an important fact: chatbots like ChatGPT are not actually drawing on a bank of information to provide you with a correct answer to your question. Instead, they are combining words based on statistical models of probability drawn from their training data.

As a result, a chatbot like ChatGPT has no way to distinguish between “true” and “false” – in fact, it can’t actually apply these concepts at all, because it can’t think. Instead, it generates stuff that its model suggests is a plausible answer to the question it’s being asked. Unfortunately, plausible doesn’t equate to correct – in fact, it can often mean convincing but false.
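If you’d like to see that idea in miniature, here is a rough sketch in Python. To be clear, this is not how ChatGPT is built: it is a toy “bigram” model that only looks at which word tends to follow which, and the three-sentence training text, along with every function name in it, is invented purely for illustration. But it shows how a system that only tracks word probabilities can produce fluent nonsense.

```python
import random
from collections import Counter, defaultdict

# Invented toy "training data" (an assumption for illustration only).
# It contains one true claim about the moon and one false one.
CORPUS = (
    "the moon is made of rock . "
    "the moon is made of cheese . "
    "the cheese is made of milk ."
)


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn the raw follow-on counts for `word` into probabilities."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: n / total for w, n in followers.items()}


def generate(counts, start, max_words=12, seed=None):
    """Build a sentence by repeatedly sampling a likely next word."""
    rng = random.Random(seed)
    words = [start]
    while len(words) < max_words and words[-1] != ".":
        followers = counts[words[-1]]
        if not followers:  # nothing ever followed this word in training
            break
        choices = list(followers)
        weights = [followers[c] for c in choices]
        words.append(rng.choices(choices, weights=weights)[0])
    return " ".join(words)


MODEL = train_bigrams(CORPUS)

if __name__ == "__main__":
    print(next_word_probabilities(MODEL, "of"))
    for seed in range(5):
        print(generate(MODEL, "the", seed=seed))
```

In this toy model, “rock”, “cheese” and “milk” are all equally likely to follow the word “of”. Nothing in the model marks one as true and the others as false, and it can happily produce “the moon is made of milk”, a sentence that appears nowhere in its training text. Real language models are vastly more sophisticated, but the sketch captures the basic problem: the output is chosen for being likely, not for being right.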

There’s also a practical question here. If ChatGPT can’t correct its own errors even when challenged, then you’ve got no choice but to navigate away to get some answers. And the idea that you’ll routinely need to switch over to Google or some other search engine to figure out what’s going on rather undercuts the idea that ChatGPT is actually making our lives that much easier.

Yes, Microsoft will be incorporating ChatGPT into its Bing search engine in the coming weeks. I guess that might make it simpler to fact-check ChatGPT when it fails at basic arithmetic or simple requests for information, which, as an otherwise enthusiastic New York Times report was forced to concede, it still does in its exciting new Bing-integrated form. But again, is this really the exciting future we’ve been promised – adding “AI fact-checker” to our already overloaded job descriptions?

5. Its safeguards rely on traumatising underpaid workers in Kenya.

Getting things wrong is one thing, but providing dangerous or harmful responses is quite another. And wouldn’t you guess, ChatGPT has managed to cover both bases!

OpenAI is aware that it’s not great to have their AI chatbot telling people how to commit crimes or generating offensive content. As a result, they have built in some safeguarding measures to prevent people from asking it to do unethical things.

However, the implementation of ChatGPT’s safeguards is reliant on some very low-tech, labour-intensive and, well, brutal means. It was revealed in January that OpenAI was paying workers in Kenya less than $2 per hour to manually review and categorise extreme and hateful content to help train the chatbot.

Yes, that meant sending the workers some of the most horrifying texts the web has to offer and asking them to find the appropriate label for them.

In many respects, this is just par for the course for large-scale tech companies. Facebook, for instance, was mired in controversy last year for using the exact same Kenyan company to help moderate harmful content on the platform. But if you’re at all ethically minded, the implications are deeply troubling. The Kenyan activist and political analyst Nanjala Nyabola has recently described the way that “sweatshops are making the digital age work”. What appears to us as a smooth and seamless process requires arduous, underpaid labour often carried out by people in the Global South.

Before we get too caught up in images of an ultra-intelligent machine helping to solve all our problems at lightspeed, we should probably remind ourselves of the very real suffering that is going on behind the scenes to make this all work. Or, to make it sort of work, sometimes.

6. Those safeguards are also easy to get around.

Now, the fact that ChatGPT’s safeguarding measures are causing psychological damage to low-paid workers might be horrifying in itself, but you may also like to know that they don’t really work very well! Isn’t that great?!

Because, as it turns out, bypassing the chatbot’s content filters is extremely easy – and in fact, there are a whole variety of ways to do so. The AI researcher Davis Blalock posted an extensive Twitter thread documenting all the many different options you can choose from if you’d like to get around the safeguards.

As the thread shows, this can be as simple as just telling it to ignore its previous instructions. You can also engage in roleplay or ask it to go into “opposite mode”. Just choose your preferred method and away you go.

Of course, OpenAI have been proactively trying to patch out these issues as they’re discovered. But it’s very much a case of letting people work out how to do bad stuff and then trying to stop them after the fact. Which isn’t necessarily the safest or most ethical approach.

This is actually part of the reason why OpenAI were able to cause such a stir with ChatGPT. Google and Meta have both been working on AI chatbots for a long time, but both have been far more cautious about what they release to the public. As Meta’s chief AI scientist recently noted, the AI powering ChatGPT isn’t particularly advanced or revolutionary. It’s just that “Google and Meta both have a lot to lose by putting out systems that make stuff up”.

Ultimately, neither company can really risk the PR nightmare that a rogue chatbot could represent. OpenAI, on the other hand, don’t really have a brand to protect, nor do they have shareholders they need to placate. And that’s empowered them to be a little, let’s say, cavalier when it comes to releasing potentially harmful products to tens of millions of people.

A few final thoughts

Generative AI has leapt into public view seemingly from nowhere, and with the tech giants now racing to take the lead, it’s clear that we’ll be seeing some pretty rapid advances in the utility and reliability of this kind of software in the coming months and years. No doubt OpenAI are working hard to solve some of the problems we’ve outlined in this article, and Google and Meta are both rushing to launch their own rival chatbots that may well avoid some of these pitfalls.

But the reality is that, for the moment, ChatGPT is not going to take your job. It might not even make it any easier or more efficient. At best, it’s likely to give you a different kind of work to do – writing and revising prompts, fact-checking answers, and getting mired in pointless arguments with a piece of software that, despite appearances, cannot think, reflect, or correct itself. Which, if you ask me, doesn’t sound all that appealing.

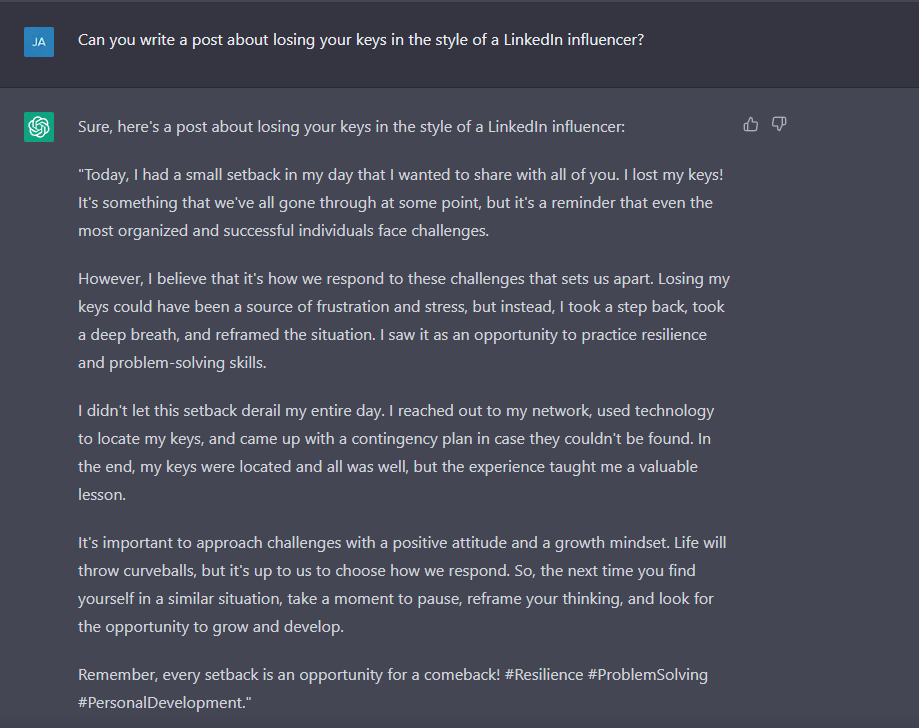

But on the other hand, if you just want to make it write funny little parodies – like a post about losing your keys written in the style of a LinkedIn influencer, for example – then there’s definitely some fun to be had.

Truly inspirational, I’m sure we can all agree.